A Picture is Worth a Thousand Prompts

Practical advice on how to make better AI art, from someone who learns by doing

I’ve been making art lately. But not with paint or pencil or camera.

I’ve been making it with words.

The artist is an AI. The brush is a neural network. The canvas? That’s digital. But the soul of it — the part that surprises me every time — comes from language. And from the conversation.

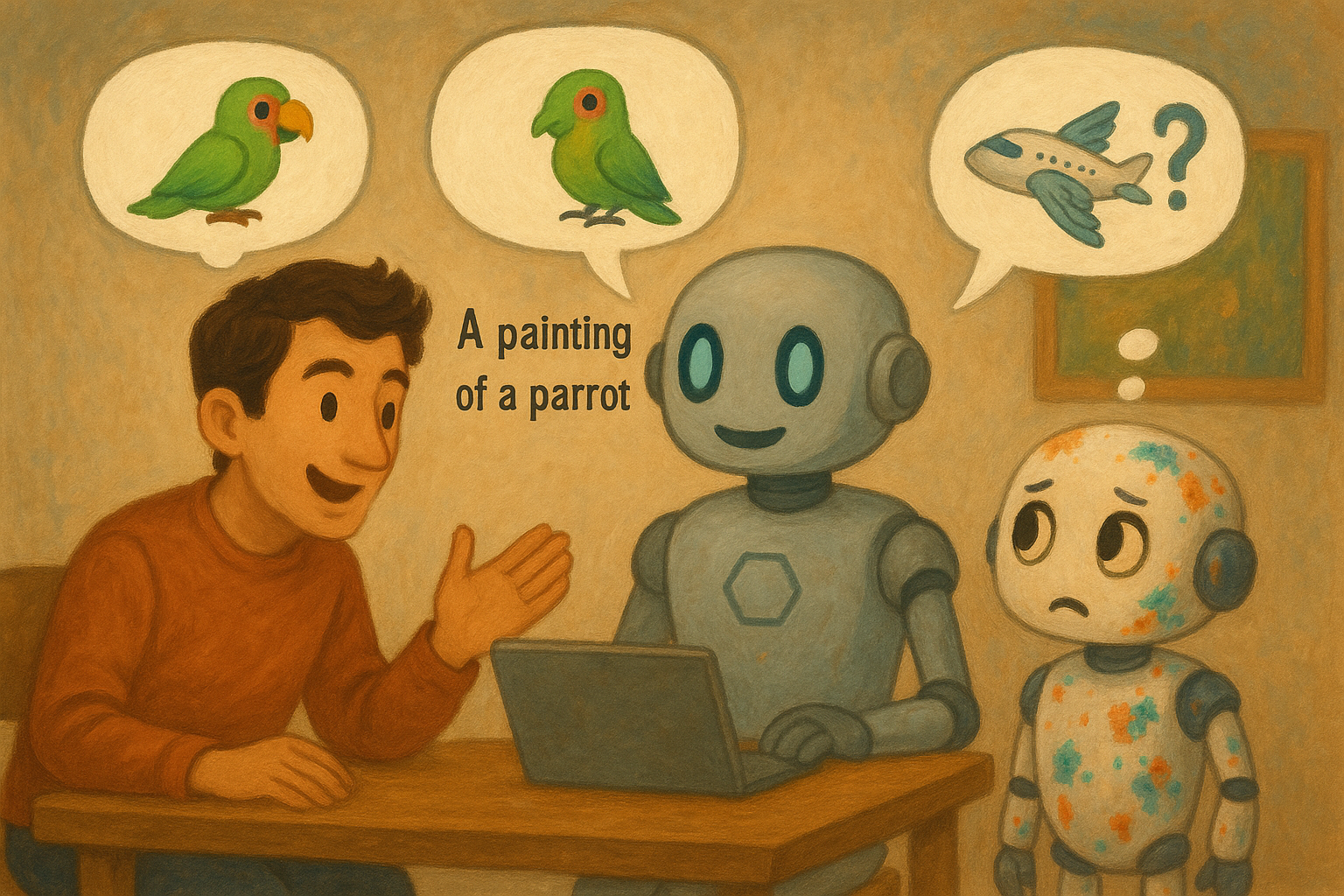

See, people think AI art is this transactional thing. You type what you want, it gives you what you asked for. But that’s not quite right. It’s more like working with a very talented, very alien art assistant who speaks just enough of your language to be helpful — and just little enough to cause delightful confusion.

So here’s what I’ve learned. If you’re not sure where to start? Ask your AI to guide you. Really. Say: “I want to make something that feels peaceful, maybe like a memory of a childhood summer. What kind of details should I include?” The machine will tell you. It’ll ask for lighting. Mood. Color palette. Composition. Style. It’ll help you define the thing you didn’t know how to say yet.
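If it helps to see that checklist written down, here is a minimal Python sketch. The `ImageBrief` class and its field names are hypothetical, made up for illustration; nothing here is part of ChatGPT or DALL·E. It only gathers the details mentioned above (mood, lighting, color palette, composition, style) and joins whichever ones you have filled in into a single descriptive prompt.

```python
from dataclasses import dataclass, fields


@dataclass
class ImageBrief:
    """Hypothetical container for the details an assistant tends to ask about."""
    subject: str
    mood: str = ""
    lighting: str = ""
    color_palette: str = ""
    composition: str = ""
    style: str = ""

    def to_prompt(self) -> str:
        """Join the subject and every filled-in detail into one prompt string."""
        parts = [self.subject]
        for f in fields(self):
            value = getattr(self, f.name)
            # Skip the subject (already added) and any detail left blank.
            if f.name != "subject" and value:
                label = f.name.replace("_", " ")
                parts.append(f"{label}: {value}")
        return ", ".join(parts)


brief = ImageBrief(
    subject="a memory of a childhood summer",
    mood="peaceful",
    lighting="late-afternoon sun",
    color_palette="warm yellows and faded greens",
)
print(brief.to_prompt())
```

The blank fields are the useful part: anything you have not decided yet (here, composition and style) is a question you can hand back to the AI.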

And that’s the first big truth of AI art: context is everything. The more you bring to the table, the better your results will be. The phrase “a picture is worth a thousand words” becomes literal here. Want something detailed and moving? You’d better start writing.

Which is ironic, isn’t it? The best AI artists might turn out to be writers. People who know how to make a mood from metaphors, how to describe textures in layers, how to say just enough.

But even the best writers won’t get it perfect the first time. You have to iterate. That’s another truth. Sometimes, sure, the magic hits on the first try. But often you’ll need to tweak it — sharpen the lighting, change the composition, adjust the pose, swap the season. This is not a flaw. This is the process.

In fact, you should think of it like working with an interpreter. You (the human) speak English. The AI (in this case, ChatGPT) listens and translates into another language — one the art model (like DALL·E) understands. So if your final image is a little “off,” maybe your original prompt was a little fuzzy. Or maybe your translator didn’t understand your accent.

Either way, it’s not a failure. It’s a conversation.

That’s what makes this whole thing feel a little like magic. You’re not coding. You’re not issuing commands. You’re collaborating — with a machine that’s part camera, part mirror, part dream.

And the more you talk to it, the better it understands you.

For anyone curious, here’s the prompt I gave ChatGPT to generate the header image:

And here’s what ChatGPT passed to DALL·E: